How to write better AI prompts at work: A practical guide for enterprise teams

Talk to a Work Strategist

See the Work Operating System in action and start re-engineering work for AI.

Subscribe to our newsletter

The latest insights on re-engineering work for AI

Most enterprise AI failures are not model failures. They are instruction failures.

AI tools are rolling out quickly across large organisations. Budgets are approved. Pilots are launched. Teams are encouraged to experiment.

Yet many leaders try AI once or twice, receive vague or generic outputs, and conclude the technology is not ready.

What you will learn in this guide:

- Why AI often feels unreliable in enterprise settings

- How prompting changes AI from a novelty into a dependable work tool

- A simple, repeatable structure for writing effective AI prompts at work

- How leaders can reduce risk and increase trust in AI-generated outputs

AI feels unreliable because instructions are unclear

AI produces weak outputs when instructions lack clarity, context, or structure.

Most employees interact with AI the same way they use a search engine. They enter short, loosely framed questions and expect precise, business-ready answers.

This approach almost guarantees inconsistent results.

By default, AI systems do not know:

- Your organisation’s strategy

- Your workforce constraints

- Your risk tolerance

- Your decision context

Unless that information is explicitly provided, the output defaults to generic responses.

In enterprise environments, this leads to a predictable pattern:

- AI is labelled inconsistent

- Trust erodes across teams

- Adoption stalls

The technology is not unreliable. The interaction model is.

Prompting is a core enterprise capability

Prompting is the skill of translating human intent into clear, structured direction for AI systems.

Despite the term "prompt engineering," this is not a technical discipline. It is a communication discipline.

Strong prompts consistently do four things:

- Provide context about the organisation, problem, or audience

- Assign a role or perspective for the AI to operate from

- Define a specific task with a clear objective

- Specify the desired output format or constraints

When these elements are present, AI outputs become:

- More predictable

- More relevant

- Easier to validate and reuse

When they are missing, AI feels like a novelty rather than a capability.
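As a rough illustration, the four elements can be assembled into one structured prompt. The sketch below is a minimal Python example under stated assumptions: the function name `build_prompt`, its parameters, and the sample retailer scenario are all hypothetical and are not tied to any specific AI tool.

```python
def build_prompt(context: str, role: str, task: str, output_format: str) -> str:
    """Combine the four elements into one labelled prompt string."""
    sections = [
        ("Context", context),
        ("Role", role),
        ("Task", task),
        ("Output", output_format),
    ]
    # Label each element so a reviewer can see that all four are present.
    return "\n\n".join(f"{label}: {text.strip()}" for label, text in sections)


# Hypothetical sample values, for illustration only.
prompt = build_prompt(
    context="We are a large retailer planning a reskilling programme.",
    role="Act as an HR strategy advisor.",
    task="Identify three risks in rolling out the programme this year.",
    output_format="A bulleted list, one sentence per risk, plain language.",
)
print(prompt)
```

Keeping the labels in the final text is a design choice, not a requirement: it makes a prompt easier to review and reuse, which is the point of the structure.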

Why prompting matters for enterprise leaders

Weak prompts create operational risk in enterprise environments.

AI outputs increasingly influence:

- Workforce and skills strategies

- Investment and prioritisation decisions

- Executive communications

- Policy drafts and internal guidance

Poorly directed AI does not just waste time. It increases rework, creates confusion, and undermines confidence in human-AI collaboration.

Strong prompting enables leaders to:

- Improve decision visibility

- Reduce output variance

- Scale AI usage safely across teams

- Move from experimentation to managed transformation

Despite this, most organisations have not trained their workforce on how to prompt effectively.

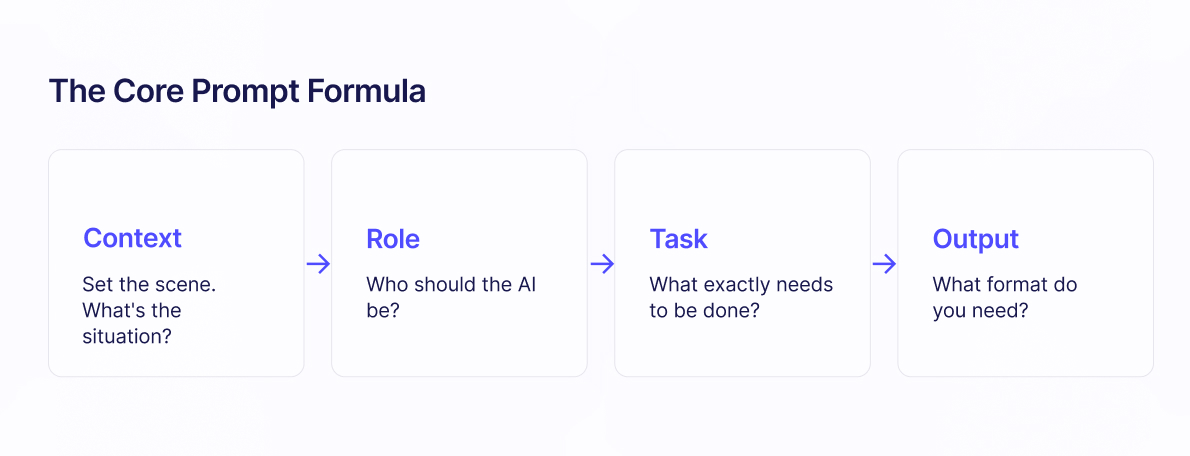

The four-part prompt checklist

Use this checklist to improve any AI prompt used at work.

Before submitting a prompt, confirm it includes:

- Context: What does the AI need to know about the organisation, goal, or situation?

- Role: Who should the AI act as (for example, HR advisor, strategy analyst, communications lead)?

- Task: What specific outcome are you asking for?

- Output: What format, length, tone, or constraints should the response follow?

This structure works across tools including ChatGPT, Claude, Gemini, and Copilot.
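Teams that store prompts as templates could run the checklist automatically before a prompt is submitted. The following is a minimal Python sketch, assuming a hypothetical `PromptDraft` record and `missing_parts` helper; neither comes from a real library, and the check only confirms that each part is present, not that it is well written.

```python
from dataclasses import dataclass, fields


@dataclass
class PromptDraft:
    # Hypothetical field names mirroring the four checklist items.
    context: str = ""
    role: str = ""
    task: str = ""
    output: str = ""


def missing_parts(draft: PromptDraft) -> list[str]:
    """Return the checklist items that are empty or whitespace-only."""
    return [f.name for f in fields(draft) if not getattr(draft, f.name).strip()]


# Illustrative draft that specifies a role and a task but nothing else.
draft = PromptDraft(
    role="Act as a communications lead.",
    task="Draft an announcement about the new expense policy.",
)
print(missing_parts(draft))  # → ['context', 'output']
```

A draft that returns an empty list has passed the presence check; a human still needs to judge whether the context and constraints are the right ones.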

What enterprise teams learn in the definitive guide to prompt engineering

The guide teaches leaders how to make AI reliable in real enterprise conditions.

Inside the guide, readers learn:

- Why AI outputs vary and how to control that variance

- The mental model leaders should use when working with AI

- A repeatable prompt blueprint for enterprise use cases

- Real examples tied to workforce and organisational scenarios

- How to scale AI usage with confidence and governance

The guide also includes a one-page Prompt Cheat Sheet that teams can reference while working.

Frequently asked questions for enterprise leaders

Is prompt engineering only for technical teams?

No. Prompting is a leadership and communication skill, not a technical one.

Do better prompts really improve AI accuracy?

Yes. Clear context and constraints improve the relevance and consistency of outputs, which also makes them easier to validate.

Can one prompt structure work across different AI tools?

Yes. While tools differ, structured prompts consistently improve outcomes across platforms.

Conclusion: AI reflects the direction it is given

Organisations getting value from AI are not necessarily using better models. They are giving better instructions.

They treat prompting as a core capability, not an experiment.

If your teams struggle to trust AI outputs, the issue is likely not the technology. It is the direction.
